Source (Bluesky)

Transcript

recently my friend’s comics professor told her that it’s acceptable to use gen AI for scriptwriting but not for art, since a machine can’t generate meaningful artistic work. meanwhile, my sister’s screenwriting professor said that they can use gen AI for concept art and visualization, but that it won’t be able to generate a script that’s any good. and at my job, it seems like each department says that AI can be useful in every field except the one that they know best.

It’s only ever the jobs we’re unfamiliar with that we assume can be replaced with automation. The more attuned we are with certain processes, crafts, and occupations, the more we realize that gen AI will never be able to provide a suitable replacement. The case for its existence relies on our ignorance of the work and skill required to do everything we don’t.

This actually relates, in a weird but interesting way, to how people get broken out of conspiracy theories.

One very common theme that’s reported by people who get themselves out of a conspiracy theory is that their breaking point is when the conspiracy asserts a fact that they know - based on real expertise of their own - to be false. So, like, you get a flat-earther who is a photography expert and their breaking point is when a bunch of the evidence relies on things about photography that they know aren’t true. Or you get some MAGA person who hits their breaking point over the tariffs because they work in import/export and they actually know a bunch of stuff about how tariffs work.

Basically, whenever you’re trying to disabuse people of false notions, the best way to start is always the same: figure out what they know (in the sense of things that they actually have true, well-founded, factual knowledge of) and work from there. People enjoy misinformation when it affirms their beliefs and builds up their ego. But when misinformation runs counter to their own expertise, they are forced to either accept that they are not actually an expert, or reject the misinformation, and generally they’ll reject the misinformation, because accepting they’re not an expert means giving up on a huge part of their identity and their self-esteem.

It’s also not always strictly necessary for the expertise to actually be well founded. This is why the Epstein files are such a huge danger to the Trump admin. A huge portion of MAGA spent the last decade basically becoming “experts” in “the evil pedophile conspiracy that has taken over the government”, and they cannot figure out how to reconcile their “expertise” with Trump and his admin constantly backpedalling on releasing the files. Basically they’ve got a tiny piece of the truth - there really is a conspiracy of powerful elite pedophiles out there, they’re just not hanging out in non-existent pizza parlour basements and dosing on adrenochrome - and they’ve built a massive fiction around that, but that piece of the truth is still enough to conflict with the false reality that Trump wants them to buy into.

You get a flat-earther who is a photography expert and their breaking point is when a bunch of the evidence relies on things about photography

Or you get a demolitions expert to watch a video of WTC7

AI only seems good when you don’t know enough about any given topic to notice that it is wrong 70% of the time.

This is concerning when CEOs and other people in charge seem to think it is good at everything, as this means they don’t know a god damn thing about fuck all.

I remember an article back in 2011 that predicted that we would be able to automate all middle and most upper management jobs by 2015. My immediate thought was, “Well these people must not do much, if a glorified script can replace them.”

Yeah, other than CFO and most* CTOs, anyone in the C-suite is easily replaceable by an LLM. Hell, the CEO could be replaced by a robot arm holding a magic 8-ball with no noticeable difference in performance.

* Probably not the majority, but I’ll be generous.

That’s the whole point of the bubble: convincing investors and CEOs that AI will replace all workers. You don’t need to convince the workers: they don’t make decisions and an awful lot of CEOs have such a high opinion of themselves that they assume any feedback from below is worthless.

Not just a high opinion of themselves, they think everyone is as self-centered as they are, and any claims about needing human workers for the task by human workers is just self-serving and not caring about the work.

AI has been excellent at teaching me to program in new languages. It knows everything about all languages - except the ones I’m already familiar with. It’s terrible at those.

The breadth of knowledge demonstrated by AI gives a false impression of its depth.

Generalists can be really good at getting stuff done. They can quickly identify the experts needed when it’s beyond their scope. Unfortunately, overconfident generalists tend not to get the experts in to help.

This makes a lot of sense. A good lesson even outside the context of AI.

I just focus on the parts of what I do know that AI can help me with, not try to say AI can replace other people, but not me. That’s some dumb shit.

This has also been coined the Gell-Mann [Amnesia] effect and is perhaps a kind of corollary to the Dunning-Kruger effect: incompetent people fail to recognize competence.

Truly intelligent people respect the work of professionals and experts in other fields. Or maybe, this is even fundamentally a respect problem.

let’s not confuse LLMs, AI, and automation.

AI flies planes when the pilots are unconscious.

automation does menial repetitive tasks.

LLMs support fascism and destroy economies, ecologies, and societies.

I’d even go a step further and say your last point is about generative LLMs, since text classification and sentiment analysis are also pretty benign.

It’s tricky because we’re having a social conversation about something that’s been mislabeled, and the label has been misused dozens of times as well.

It’s like trying to talk about knife safety when you only have the word “pointy”.

It’s like trying to talk about knife safety when you only have the word “pointy”.

holy shit yes! it’s almost like the corpos did it that way so they can just move the goalposts when the bubble pops.

I generally assume intent that’s more shallow if it’s just as explanatory. It’s the same reason home appliances occasionally get a burst of AI labeling. “Artificial intelligence” sounds better in advertising than “interpolated multi variable lookup table”.

It’s a type of simple AI (measure water filth from an initial rinse, dry weight, soaked weight, and post-spin weight, then find the average for the settings from preprogrammed values), but it’s still AI.

Biggest reason I think it’s advertising instead of something more deliberate is because this has happened before. There’s some advance in the field, people think AI has allure again and so everything gets labeled that way. Eventually people realize it’s not the be-all and end-all and decide that it’s not AI, it’s “just” a pile of math that helps you do something. Then it becomes ubiquitous and people think the notion of calling autocorrect AI is laughable.
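For anyone wondering what an “interpolated lookup table” looks like in practice, here is a rough sketch. It is not how any real washing machine is programmed: the table values, the `TablePoint` struct, and the `wash_minutes_for` function are all made up for illustration, and it uses a single input (turbidity) instead of the several measurements mentioned above.

```cpp
#include <array>
#include <cstddef>

// One preprogrammed point: a sensor reading and the setting tuned for it.
// Both fields are hypothetical and in arbitrary units.
struct TablePoint {
    double turbidity;     // "water filth" reading from the initial rinse
    double wash_minutes;  // wash duration chosen for that reading
};

// Made-up calibration values, sorted by turbidity.
constexpr std::array<TablePoint, 4> kTable{{
    {0.0, 20.0}, {10.0, 30.0}, {30.0, 45.0}, {60.0, 60.0}
}};

// Linearly interpolate between the two table points that bracket the reading.
// Readings outside the table are clamped to the first or last entry.
double wash_minutes_for(double turbidity) {
    if (turbidity <= kTable.front().turbidity) return kTable.front().wash_minutes;
    if (turbidity >= kTable.back().turbidity) return kTable.back().wash_minutes;
    for (std::size_t i = 1; i < kTable.size(); ++i) {
        const TablePoint& lo = kTable[i - 1];
        const TablePoint& hi = kTable[i];
        if (turbidity <= hi.turbidity) {
            const double t = (turbidity - lo.turbidity) / (hi.turbidity - lo.turbidity);
            return lo.wash_minutes + t * (hi.wash_minutes - lo.wash_minutes);
        }
    }
    return kTable.back().wash_minutes;  // not reached for a sorted table
}
```

With these made-up numbers, a reading halfway between two table entries gets a wash time halfway between their settings. That is the whole trick, which is the point: it is a pile of math, and it still gets the AI sticker.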

My favourite ‘will one day be pub trivia’ snippet from this whole LLM mess, is that society had to create a new term for AI (AGI), because LLMs muddied the once accurate term.

accountants rage silently intensifies

Just wait until they create artificial people and autonomous robots.

No accounting acronym is safe from their tyranny.

I have AGI every year…

To be fair, AI was still underwhelming compared to what people imagined AI to be, it’s just that LLM essentially swore up and down that this is the AI they had been waiting for, and that moved the goalposts to have to classify ‘AGI’ specifically.

Which explains why C-suites push it so hard for everyone

Well, they do have the one job that actually can be replaced by “AI” (though in most cases it’d be more beneficial to just eliminate it altogether).

Which is acting like they know everything about everyone else’s jobs, while making up wholly inaccurate assumptions

It’s why managers fucking love GenAI.

My personal take is that GenAI is ok for personal entertainment and for things that are ultimately meaningless. Making wallpapers for your phone, maps for your RPG campaign, personal RP, that sort of thing.

‘I’ll just use it for meaningless stuff that nobody was going to get paid for either way’ is at the surface-level a reasonable attitude; personal songs generated for friends as in-jokes, artwork for home labels, birthday parties, and your examples… All fair because nobody was gonna pay for it anyway, so no harm to makers.

But I don’t personally use them for any of those things myself though, some of my reasons: I figure it’s just investor-subsidized CPU cycles burning power somewhere (environmental), and ultimately that use-case won’t be a business model that makes any money (propping the bubble), it dulls and avoids my own art-making skills which I think everyone should work on (personal development atrophy), building reliance on proprietary platforms… so I’d rather just not, and hopefully see the whole AI techbro bubble crash sooner than later.

I figure it’s just investor-subsidized CPU cycles burning power somewhere (environmental)

This can be avoided by using local open-weight models and open source technology, which is what I do.

Yeah, that certainly addresses that issue. I may do the same in the future, just haven’t found the need to do so as yet. For most who lean on AI for the simple tasks mentioned above, they use an AI service rather than a local model.

My personal take is that GenAI is ok for personal entertainment and for things that are ultimately meaningless

Were you previously much more pro-GenAI, or am I misremembering?

No that was always my position


I’m in a nightmare scenario where my new job has a guy using Claude to pump out thousands of lines of C++ in a weekend. I’ve never used C++ (just C for embedded devices).

He’s experienced, so I want to believe he knows what he’s doing, but every time I have a question, the answer is “oh that’s just filler that Claude pumped out,” and some copy pasted exposition from Claude.

So I have no idea what’s AI trash and what’s C++ that I don’t know.

Like a random function was declared as a template. I had to learn what function templates are for. So I do, but the function is only defined once, and I couldn’t think of why you would need to templatize it. So I’m sitting here barely grasping the concept and syntax and trying to understand the reasoning behind the decision, and the answer is probably just that Claude felt like doing it that way.
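In case it helps anyone else coming from C, here is a small sketch of the difference. The function names (`clamp_int`, `clamp_value`) are hypothetical and have nothing to do with the codebase described above. A template pays off when the same logic is called with several types; if only one type is ever used, a plain function does the same job with less to read.

```cpp
#include <cassert>
#include <string>

// Plain function: enough when only one type is ever passed in.
inline int clamp_int(int value, int lo, int hi) {
    assert(lo <= hi);
    if (value < lo) return lo;
    if (value > hi) return hi;
    return value;
}

// The same logic as a function template. The compiler generates one concrete
// function for each type T that the program actually calls it with, so this
// only earns its keep if there are (or will be) callers with different types.
template <typename T>
T clamp_value(T value, T lo, T hi) {
    assert(!(hi < lo));
    if (value < lo) return lo;
    if (hi < value) return hi;
    return value;
}
```

Calling `clamp_value(2.5, 0.0, 1.0)` instantiates a `double` version and `clamp_value<std::string>("m", "a", "f")` a `std::string` version. A template that is only ever instantiated with one type, as described above, behaves like the plain function, just with extra syntax.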

That’s just what C++ people do. They are all equally mad. I am not even joking.

Can you elaborate? This is my first time dealing with higher level languages in the workplace (barring some Python scripts), and I feel like I’m losing my mind.

Ask a C++ programmer to write “Hello World” and they’ll start by implementing a new string type that maybe saves half a CPU instruction when compiled for a very specific CPU but the code can’t be read by anyone else or themselves in 6 weeks

Ah. So basically FizzBuzz Enterprise.

Your coworker is mistreating Claude and this story wants me to call CPS.

Claude will come up with all kinds of creative ideas and that’s neat, but you really need to rein it in to make it useful. Use Claude’s code as a suggestion, cut out the stuff that’s over the top – explain why you did that to Claude, it will generally get it. Add it to your CLAUDE.md if it’s a repeat issue.

Claude will ~~come up with~~ surface all kinds of ~~creative~~ other people’s ideas and that’s neat, but you really need to rein it in to make it useful. Use Claude’s code as a suggestion, cut out the stuff that’s over the top – explain why you did that to Claude, it will generally ~~get it~~ incorporate that into future prompts. Add it to your CLAUDE.md if it’s a repeat issue.

FTFY

Thanks.

Thank you. Dude checked in a shit load of code before going on PTO for three weeks. We get pretty live plots of data, but he broke basically every hardware driver in the process.

That’s actually terrifying. Code has been degrading a lot in the past 10 years or so, and it looks like the LLM trend for code is doing more harm than good. I don’t think that needs to be the case but it appears to be the case.

Like, Microsoft’s CEO bragging that 25% of all their code was written by AI, and that was a year plus ago now so it’s probably higher at this point… I don’t find that reassuring, it’s part of the reason I won’t let my Windows workstation upgrade to 23H2 and I’ve almost completely converted to Linux at home (I keep the laptop running my simulator games on Windows because peripherals can be a pain to get working right without the manufacturer’s software, but it’s also locked to 23H2)

And I’ve used AI to assist me with writing code.

But that’s the distinction: it assists me, it doesn’t write it for me. If I don’t understand how or why something works or why I would do it this way, I’m not using it in production. Far too many seem to be checking out, though, and telling their GPT to take the wheel, and that’s where I think one of the biggest issues comes in.

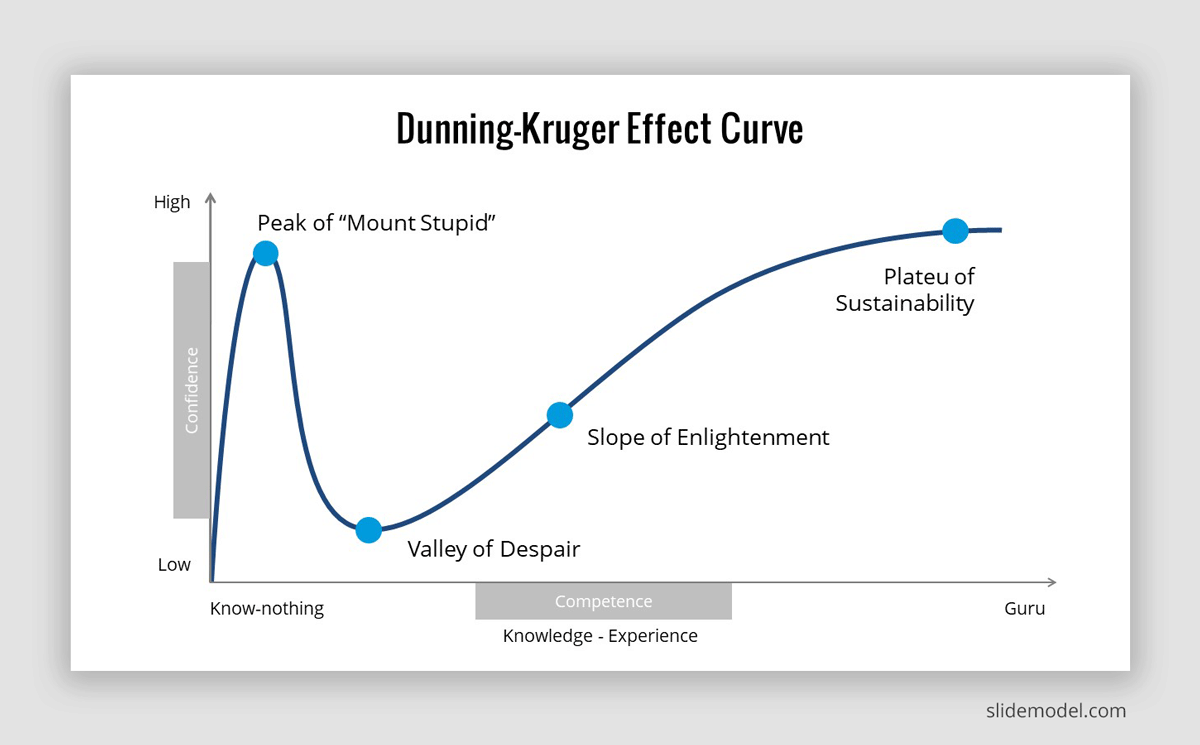

Yeah that’s Dunning-Kruger in a nutshell. Kind of scary that almost everyone in leadership positions sits atop the peak of “Mount Stupid” for most of the things they make decisions about.

Feel like the plateau of sustainability is too high. Being supremely competent in a field can’t compete with the obnoxious confidence of the idiots…

Interestingly, we all sit somewhere near the peak of “Mount Stupid” on nearly every decision we make in a given day. Btw, how long is yogurt good for in the fridge?

The difference is, the more “leadership” you get, the more isolated you are from getting reality shoved in your face. I think being a billionaire is actually a form of brain damage. They never get feedback on when they are wrong, or if they do, they are surrounded by sycophants who will tell them the critic is wrong. The rest of us at least get humbled once in a while.

Good point. When I go “how hard can it be?” and try to fix my car by myself - I inevitably end up eating humble pie and paying a mechanic.

When CEOs lay off half the company, they cash out their stock and get hired to run another company before they see any consequences.

Choosing a screw. Pretty straightforward, right? It’s not. What forces are involved? What materials the screw and the surface are made of? What conditions will it be exposed to?

playing bass guitar-- there’s an exception. it’s exactly as difficult as it looks

Pretty difficult then? I’ve heard some jazz bass guitarists that were insane

low end and rhythm-- that’s what the bass guitar is there for. you can still be technically officially a competent “bassist” without any of the fancy technical embellishments that great bass players employ.

but yea, not to disparage the bass guitar at all, but the basics of matching the kick drum and chord progression and the physical chops of actually playing the thing doesn’t take that long to get a handle on

edit: check out les claypool and primus for an example of some rare bass-centric music

Counterpoint: time. Even playing simple lines, there’s a big difference between a groove that is completely locked in, and one that is not. And that difference is all about the precise timing of the hits between the players in the rhythm section. The bass sets the foundation of all of that.

Simple bass line, anyone can play this.

Life is hard for a musician. For a bassist it’s nearly impossible.

I basically assume every aspect of the work my friends do is insanely difficult and they have to put in effort convincing me certain parts are stupid easy that even a child could do it.

almost everything is more difficult and more complex than I initially assume it is.

Try being born rich.

That’s also why the billionaires love it so much:

they very rarely have much if any technical expertise, but imagine that they just have to throw enough money at AI and it’ll make them look like the geniuses they already see themselves as.

That and it talks to them like every jellyfish yes man that they interact with.

Which subsequently seems to be why so many regular ass people like it, because it talks to them like they’re a billionaire genius who might accidentally drop some money while it’s blowing smoke up their ass.

I literally have to give my local LLM a bit of a custom prompt to get it to stop being so overly praising of me and the things that I say.

It’s annoying, it reads as patronizing to me.

Sure, every once in a while I feel like I do come up with an actually neat or interesting idea… but if you went by the default of most LLMs, they basically act like they’re a teenager in a toxic, codependent relationship with you.

They are insanely sycophantic, reassure you that all your dumbest ideas and most mundane observations are like, groundbreaking intellectual achievements, all your ridiculous and nonsensical and inconsequential worries and troubles are the most serious and profound experiences that have ever happened in the history of the universe.

Oh, and they’re also absurdly suggestible about most things, unless you tell them not to be.

… they’re fluffers.

They appeal to anyone’s innate narcissism, and amplify it into ego mania.

Ironically, you could maybe say that they’re programming people to be NPCs, and the template they are programming to be, is ‘Main Character Syndrome’.

billionaires love it

They think it knows everything because they know nothing.

Which ironically means that they are the easiest people to replace with AI.

… They just… get to own them.

For some reason.

So the only real business model here is for people to be able to produce things they are not qualified to work on, with an acceptable risk of generating crap. I don’t see how that won’t be a multi-trillion-dollar market.

Investors are rarely experts in the particular niches that the companies they hold shares in are applying AI to.

produce things they are not qualified to work on, with an acceptable risk of generating crap

You just described the C-suite at most major companies.

So Gen AI is like Dan Brown, the more you know about the subject the more it sucks

Or fiction in general…

Watch a doctor’s opinion on most medical shows…

A computer expert’s opinion on most ‘hacking scenes’…

A lawyer on any legal drama…

IDK about that I’m a professional slop maker and I think it could replace me easily.

Ignorance and lack of respect for other fields of study, I’d say. Generative AI is the perfect tool for narcissists because it has the potential to lock them in a world where only their expertise matters and only their opinion is validated.