We’re aware of ongoing federation issues for activities being sent to us by lemmy.ml.

We’re currently working on the issue, but we don’t have an ETA right now.

Cloudflare is reporting 520 - Origin Error when lemmy.ml is trying to send us activities, but the requests don’t seem to arrive properly at our proxy server. Federation with all other instances is working fine so far, but we have seen a few more requests not related to activity sending that seem to occasionally report the same error.

Right now we’re about 1.25 days behind lemmy.ml.

You can still manually resolve posts in lemmy.ml communities or comments by lemmy.ml users in our communities to make them show up here without waiting for federation, but this obviously is not something that will replace regular federation.
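For the curious: programmatically, manually resolving an object is roughly one authenticated API call. The sketch below is a minimal illustration, assuming Lemmy 0.19's `GET /api/v3/resolve_object` endpoint with a `q` query parameter and bearer-token auth; check your instance's API version before relying on it. The function names, post URL and token are placeholders, not anything from this announcement.

```python
import json
from urllib.parse import urlencode
from urllib.request import Request, urlopen


def build_resolve_request(instance: str, object_url: str, jwt: str) -> Request:
    # Assumed endpoint: GET /api/v3/resolve_object?q=<remote URL> (login required).
    query = urlencode({"q": object_url})
    return Request(
        f"https://{instance}/api/v3/resolve_object?{query}",
        headers={"Authorization": f"Bearer {jwt}"},  # 0.19-style bearer auth (assumption)
        method="GET",
    )


def resolve(instance: str, object_url: str, jwt: str) -> dict:
    # Sends the request and returns the decoded JSON response from the instance.
    with urlopen(build_resolve_request(instance, object_url, jwt), timeout=30) as resp:
        return json.load(resp)
```

For example, `resolve("lemmy.world", "https://lemmy.ml/post/123", token)` would ask lemmy.world to fetch that (hypothetical) lemmy.ml post right away instead of waiting for the activity to be delivered.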

We’ll update this post when there is any new information available.

Update 2024-11-19 17:19 UTC:

Federation has resumed and we’re down to less than 5 hours of lag; the remainder should be caught up soon.

Unfortunately, the root cause has still not been identified.

Update 2024-11-23 00:24 UTC:

We’ve explored several approaches to identify and/or mitigate the issue: replacing our primary load balancer with a new VM, updating HAProxy from the latest version packaged in Ubuntu 24.04 LTS to the latest upstream version, and finding and removing a configuration option that may have prevented certain errors from being logged. So far, however, we haven’t made any progress beyond ruling out various potential causes.

We’re currently waiting for the lemmy.ml admins to be available to reset federation failures at a time when we can start capturing traffic, to get more insight into what is hitting our load balancer, as the problem seems to be either between Cloudflare and our load balancer or within the load balancer itself. Due to real-life time constraints, we weren’t able to find a suitable time this evening; we expect to continue with this tomorrow during the day.

As of this update we’re about 2.37 days behind lemmy.ml.

We are still not aware of similar issues on other instances.

this comment section is not a place to rant about other instances

Yeah a swept clean comment section looks way better…

I don’t see why not.

I’ll just post a sentence instead of a rant then, defederate from .ml please :3

The hypocrisy of people exaggerating the scale of the problems and piling on about other instances in their manic drive to turn this place into an echo chamber is depressing.

It’s been far better the past few days without them.

Honestly some of the discussions I have with lemmy.ml people don’t make sense in any capacity

I haven’t really had that experience with anyone from .ml yet. There are a handful of actual tankies there, but for the most part, from what I have seen, it’s mostly various forms of far-left opinion, which no one should be afraid of.

Some people here do spend an unseemly amount of time complaining about them. Which is more toxic than anything they’re usually complaining about.

I had interactions like this person: https://lemmy.world/comment/13434977

A lot of them seem to be like the crazy Facebook crowd (not all).

But that being said, unfortunately, as Lemmy grows, I’m seeing more of that in general where we slowly move away from a “scientific” oriented approach, to half-truths and biased information.

So you’re probably right.

But yeah, people do complain a disproportionate amount about them (I feel like a lot of the people screaming “tankie” are similar to the Trump crowd)…

That whole argument was painful to read. By the end he was so frustrated he became very obnoxious. Not cool.

I didn’t get the sense of someone deliberately sowing misinformation however. Being misinformed isn’t a dealbreaker nor is a poor rhetorical style.

I think it is important to continue forward with users with a wide range of sources. None of us have a monopoly on the truth and all of our ‘trusted’ sources palter and decontextualise their facts to some degree.

I know it’s probably an unrealistic expectation but I’d rather mods, in general, had higher standards for curating civility in discussions.

“Echo chamber” is often used as a criticism on the internet. But in real life, when you make friends, it’s with like-minded people, right? So maybe it makes sense to seek online spaces with like-minded people, rather than spaces with people of various views attacking and insulting each other.

It’s a reasonable argument for giving you tools for tailoring your own feed but not for censoring entire groups out.

It makes sense.

Not everyone on .ml wants to eat your dog. Just as not everyone on .world is Trolleyman.

I’d say that the majority of people on the fediverse are capable of having a civil conversation on most topics. Some might have a handful of issues that they dig their heels in over, but they can be civil if you comport yourself respectfully. And then there is a tiny minority of narcissistic zealot ideologues that try to maintain a narrow normative pressure on permissible opinions.

Remember folks.

This is how they infiltrate circles and twist them to right wing authoritarianism and extremism

They send in someone who uses a soft approach as their opening gambit, talking about how they are good people, how they don’t deserve to be excluded, how it’s really unfair to judge them for what they and their associates do, and how if you were really as tolerant and as leftist as you said you were, you’d accept them, and engage with them, and sup with them.

While handwaving any of the actual, legitimate, pointed criticisms as the actions of a “tiny minority of zealots”

It’s death by a thousand cuts, and what Hamartia is doing here is trying to make the first tiny cut. To try to make you feel unreasonable, and possibly even bigoted, for taking a stand against the poor, innocent nazis/tankies/etc.

You know why this hasn’t been a significant problem until this past decade? Because these people were punched in the mouth the second they started "Well, Ackshually~"ing in defense of right-wing fascist extremism. The second they started to fly their freak flags. It’s why they have a whole underculture of co-opted symbology and secret coded language: because they were scared and had to hide, and needed a way to recognize each other without risking a baseball bat to their jaw.

And the reason we stopped doing that is because this soft-handed approach of theirs worked. It’s why they dropped the coded language. It’s why their freak flags are flying high and proud now. It’s why they are openly, gleefully voicing their absolutely horrid, unfiltered hatred with abandon now. Because what Hamartia is doing worked. It got their foot in the door, and as soon as it was there, they crushed in with the entire party and took over.

You can see where we are today, and it’s because we let their faux-reason, concern trolling, and play-acting exploit our tolerance.

And you see what it’s gotten us.

And you see why it’s better to go back to the old classics of punching these motherfuckers in the mouth.

So don’t let this soft-hand propaganda being done by Hamartia and others sway you. Don’t let it make you feel unreasonable, or uncivil. They are manipulating you to open the door for the ones behind them that aren’t capable of feigning and playacting “polite” discourse, in an attempt to make you feel like you’re the bad guy.

These people have no place in a polite and tolerant society. They are a cancer that seeks to destroy it by exploiting our beliefs against ourselves. It’s not paradoxical. It’s not hypocritical. It’s not whatever bullshit attempt they make next at trying to twist tolerance into a dagger for them to use to stab tolerance itself in the back and kill it, to their extreme benefit and to society’s great loss.

This is all patent nonsense.

True. People out here fighting leftists like their life depends on it

“leftists”

Please defederate from them.

Because they’re tankies, and I really don’t need to explain to you why that’s a bad thing. You already know.

I don’t know what tankie means

Don’t worry, neither does he.

No seriously. I see the term used in memes and I don’t know what it means.

A tankie is someone who sided with the authoritarian government and their tanks against the one guy who stood in front of them in Tiananmen Square.

They say they’re communists but defend an authoritarian oligarchy…

Authoritarians say they’re communist because they hate communism and don’t want people to think it’s a good idea. So they call everyone on the left a tankie.

It’s a stupid label and 99% of the people throwing it around don’t understand anything about it and are just lying about what communism is.

Oh wow! Haha ok thanks. From context clues it seemed like it could mean anyone.

Here’s a Wikipedia article that explains what a “Tankie” is.

TL;DR - It’s a slur to refer to authoritarian communists, originally used to speak out against people who supported using tanks to suppress dissidents.

Generally, anyone who supports aggression or violence to silence or oppress people are referred to as Tankies.

No, being a tankie isn’t only about supporting aggression / silencing people, it is specifically about romanticizing communism as some great philosophy that solves every problem and, by extension, sucking the D of Russia and China, while going “every other country bad” to the point of complete insanity.

Otherwise, the US right wing would be tankies too.

Block the instance on your own client, not all of us are as closed minded as you are.

Ookie dookie

Ah, I blocked Lemmy.ml just this morning. Seems to have been interesting timing :)

I’ve never had a positive interaction with Lemmy.ml. For me it serves as a quarantine space, and a set of pre-tagged users I don’t personally enjoy dealing with.

…and I’m not particularly averse to Marxist sentiments either, but they’re certainly not good salespeople, diplomats, or representatives of their cause.

Which is just part of their reputation now. Having a bad experience with a .ml user seems to be part of the lemmy experience. It’s kind of comical how consistent it seems.

That said, I’m sure there’s good people on .ml.

Yeah, just avoid politics there and it’s fine. We all know about their zeal so it’s pointless to discuss it.

Is there a reason people seem to not like .ml? I only joined because it said the instance was for FOSS enthusiasts

Wow that’s crazy, thank you and the other person for informing me

Yes. Because it isn’t for FOSS enthusiasts… They use the .ml specifically to refer to an oppressive, violent, ignorant political ideology. Every bit as bad as capitalism. That’s a threat to anyone that disagrees with them left or right.

A lot of older established communities are there by the circumstance of it being the oldest server. And not by any other virtue. In fact, there are a number like the KDE project that have their own instance. Completely detached from political ideology. Which is a wise decision. A lot of official projects don’t want to be associated with the regular hypocritical and disparaging remarks of the admin staff there

I’ve been a foss enthusiast since the late 80s early 90s when I was in college. Used Linux since 94. Dabbled in BSD a bit before. Am solidly towards the anarchist left. And I block ML on principle alone. Authoritarians aren’t allies. And access to open source communities shouldn’t hinge on not accidentally crossing the fragile and hypocritical political ideologies of such groups. No place or group is perfect. But few are so flawed out of the gate.

Yikes! Thank you for writing this out, I had no idea. Fk those .ml tankies

Russians

So it’s your fault!

In which case, thank you.

No one said it was a global variable. You can hardly blame the user for poor documentation.

I mean…if you wanted to defederate from lemmy.ml I’d be fine with that.

There’s too much content on lemmy.ml to defederate. We’d lose like a quarter of all the content.

We’d lose the .ml version of that content.

But I thought the whole point of federation was that if mods, or communities become problematic, you could always create your own. Then all the non-problematic people will move to that.

Is that not the very foundation of the concept of the fediverse?

In order for that to work there needs to be effort taken to curb persistent network effect like the utterly monolithic communities on lemmy.ml. When there isn’t any, they just get bigger and stronger. That isn’t something you can do by starting new instances, it has to be done by ones with a big slice of the pie, something new instances never have unless started just after the collapse of an existing one.

It’s not wrong, but I don’t regard Lemmy.ml as being problematic enough to defederate from. Their moderation practices are questionable and their user base is annoying but it’s otherwise generally tolerable. People can block the instance if they don’t want to see content from it.

It would be nice to have a simple way to block the user base too

Would need to be one that restricts participation of the other party, Lemmy blocks are useless in that regard. Otherwise you’re not doing anything to deal with network effect just pretending those spaces don’t exist. Something only useful for snowflakes with weak emotions, and not people who want to make a difference.

We would adapt, just like when Beehaw was a significant amount of the userbase on Lemmy and they cut us and sh.itjust.works off, we adapted and they got smaller. Lemmy.world is a bigger server than lemmy.ml. Only reason their communities are still so big is network effect. Which would be curbed by them being cut off. As I’ve said already, network effect is curbed by force, taking away a choice, not providing 7 more choices while leaving the original.

It’s certainly an efficient way to resolve the problem.

I wouldn’t.

Lemm.ee is not loading at all, giving a 503 error. Possibly related?

This probably should explain: https://lemmy.world/post/22166289

There have actually been some issues with Reddthat as well, not seeing users etc.

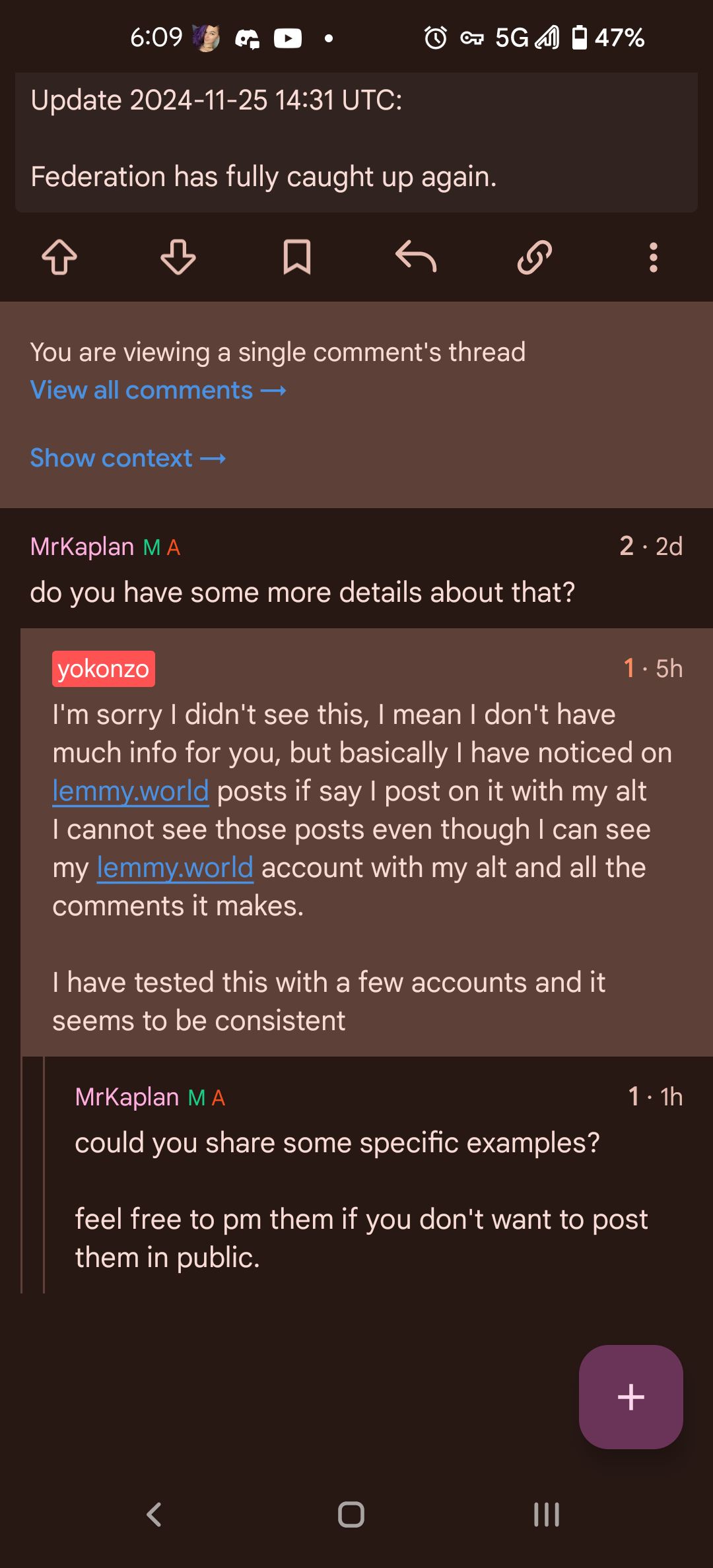

do you have some more details about that?

I’m sorry, I didn’t see this. I don’t have much info for you, but basically I’ve noticed that if I post on lemmy.world with my alt, I can’t see those posts, even though I can see my lemmy.world account with my alt and all the comments it makes.

I have tested this with a few accounts and it seems to be consistent

could you share some specific examples?

feel free to pm them if you don’t want to post them in public.

Like, it wasn’t even posted, as shown here. I noticed it around July or so.

i still didn’t understand what you were referring to, but now that i looked at this comment thread on reddthat.com I can see that the other account that commented here is banned from lemmy.world: https://lemmy.world/modlog?userId=1250220

the justification for the ban is just “spam”, which unfortunately doesn’t provide much context, and I don’t see anything immediately obvious that’d justify it. especially considering that it’s been a year since the ban, it was likely not necessary to issue a permanent ban for that. i’ve unbanned your reddthat account now.

I too would love to know what you’re experiencing (so I can fix it!)

Oh, come on. Again?

The Usual Suspects, Network Troubleshooting Edition

FUCK active directory

Ok, but who are you marrying and killing?

Do these things usually happen from time to time?

I’ve noticed some lemmy.ml communities looking surprisingly “dead” some days here and there but not thought much of it.

I wouldn’t say usually, but they can happen from time to time for a variety of reasons.

It can be caused by overly aggressive WAF (web application firewall) configurations, proxy server misconfigurations, bugs in Lemmy and probably some more.

Proxy server misconfiguration is a common one we’ve seen other instances have issues with from time to time, especially when federation works between Lemmy instances but, e.g., Mastodon -> Lemmy doesn’t work properly, because the proxy configuration only matches Lemmy’s specific behavior rather than spec-compliant requests in general.
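To illustrate that kind of misconfiguration, here is a hypothetical routing check written in Python (real setups express this in nginx or HAProxy config, and none of these names come from Lemmy). The ActivityPub spec has clients send `Accept: application/ld+json; profile="https://www.w3.org/ns/activitystreams"` on GET, uses the same media type as `Content-Type` for inbox POSTs, and asks servers to treat `application/activity+json` as equivalent. A rule that only matches one exact header string will quietly send otherwise valid requests to the wrong backend.

```python
# Hypothetical proxy routing check, for illustration only: decide whether a
# request should go to the Lemmy backend (ActivityPub) or to the web UI.
AS_PROFILE = "https://www.w3.org/ns/activitystreams"


def _media_types(value: str):
    """Yield (media_type, params) pairs from an Accept or Content-Type value."""
    for part in value.split(","):
        pieces = [p.strip() for p in part.split(";")]
        media_type = pieces[0].lower()
        params = {}
        for piece in pieces[1:]:
            if "=" in piece:
                key, val = piece.split("=", 1)
                params[key.strip().lower()] = val.strip().strip('"')
        if media_type:
            yield media_type, params


def _is_activitystreams(media_type: str, params: dict) -> bool:
    if media_type == "application/activity+json":
        return True
    # ld+json only counts when it carries the ActivityStreams profile.
    return (media_type == "application/ld+json"
            and AS_PROFILE in params.get("profile", "").split())


def naive_is_activitypub(method: str, headers: dict) -> bool:
    """Too strict: only matches one exact Accept string, ignores Content-Type."""
    h = {k.lower(): v for k, v in headers.items()}
    return h.get("accept") == "application/activity+json"


def spec_is_activitypub(method: str, headers: dict) -> bool:
    """Accepts any media type the ActivityPub spec treats as ActivityStreams."""
    h = {k.lower(): v for k, v in headers.items()}
    # Inbox deliveries are POSTs, so check the body type; GETs use Accept.
    value = h.get("content-type" if method.upper() == "POST" else "accept", "")
    return any(_is_activitystreams(t, p) for t, p in _media_types(value))
```

With the `Accept` value the spec prescribes, `naive_is_activitypub` returns `False` (the request would land on the UI), while `spec_is_activitypub` returns `True`.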

Overly aggressive WAF configurations are usually the result of instances being attacked or overloaded, either by DDoS attacks or by aggressive AI service crawlers.

Usually, when there are no configuration changes on either side, issues like this don’t just show up randomly.

In this case, there was a change on the lemmy.ml side, and we don’t believe any change on our side coincided with when this started happening (we don’t have the exact date for when the underlying issue started). The behavior on the sending side might have changed with the Lemmy update, and other instances might simply happen not to be affected. We currently believe this is likely just exposing an issue on our end that already existed before the changes on lemmy.ml, except that the specific logic involved was previously not used.

FWIW the communities on walledgarden.xyz have been having federation issues to lemmy.ml for a few days as well.

Since we were/are working through some things with our host I didn’t want to bother anyone from lemmy.ml about it, but it’s a thing. AFAIK federation is otherwise working normally.

Good luck getting it figured out and resolved!

Could it be an issue/compatibility with lemmy.ml running Lemmy v0.19.7 ?

I don’t believe it is.

There weren’t any network related changes from 0.19.6 to 0.19.7 and we haven’t seen this behavior with any of the 0.19.6 instances yet.

The requests are visible with details (domain, path, headers) in Cloudflare, but they’re not showing on our proxy server logs at all.

I’ve read enough posts over at /r/sysadmin, it is always DNS.


Hmm, interesting, so I guess even though I can see this hours-old post, my comment should arrive in several days’ time. Hopefully I haven’t responded to anyone on world with anything important recently.

it arrived a few minutes ago, federation is working again (for now)

I can think of a solution.

Should be a feature, not a bug.