I wonder if my system is good or bad. My server needs 0.1kWh.

Do you mean 0.1kWh per hour, so 0.1kW or 100W?

My N100 server needs about 11W.

The N100 is such a little powerhouse and I’m sad they haven’t managed to produce anything better. All of the “upgrades” are either just not enough of an upgrade for the money, or just more power hungry.

To my understanding 0.1kWh means 0.1 kW per hour.

0.1kWh per hour can be written as 0.1kWh/h, which is the same as 0.1kW.

Thanks. Hence, in the future I can say that it uses 0.1kW?

If this was over an hour, yes. Though you’d typically state it as 100W ;)

Yes. Or 100W.

It’s the other way around. 0.1 kWh means 0.1 kW times 1 h. So if your device draws 0.1 kW (100 W) of power for an hour, it consumes 0.1 kWh of energy. If your factory draws 360 000 W for a second, it consumes the same amount of 0.1 kWh of energy.

Thank you for explaining it.

My computer uses 1kwh per hour.

It does not yet make sense to me. It just feels wrong. I understand that you may normalize 4W in 15 minutes to 16Wh because it would use 16W per hour if it would run that long.

Why can’t you simply assume that I mean 1kWh per hour when I say 1kWh? And not 1kWh per 15 minutes.

kWh is a unit of energy consumed. It doesn’t say anything about time and you can’t assume any time period. That wouldn’t make any sense. If you want to say how much power a device consumes, just state how many watts (W) it draws.

Thanks!

A watt is 1 Joule per second (1 J/s), i.e. every second, your device draws 1 Joule of energy. This energy over time is called “Power” and is a rate of energy transfer.

A watt-hour is (1 J/s) * (1 hr)

This can be rewritten as (3600 J/hr) * (1 hr). The “per hour” and “hour” cancel themselves out which makes 1 watt-hour equal to 3600 Joules.

1 kWh is 3,600 kJ or 3.6 MJ
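The same unit arithmetic as a small Python sketch. The function names are made up for illustration; the only facts used are 1 W = 1 J/s and 1 h = 3600 s.

```python
SECONDS_PER_HOUR = 3600

def energy_joules(power_watts, seconds):
    """Energy (J) = power (W, i.e. J/s) multiplied by time (s)."""
    return power_watts * seconds

def joules_to_kwh(joules):
    """1 kWh = 1000 W * 3600 s = 3,600,000 J."""
    return joules / (1000 * SECONDS_PER_HOUR)

# 100 W for one hour and 360,000 W for one second are the same energy.
server = energy_joules(100, SECONDS_PER_HOUR)
factory = energy_joules(360_000, 1)
print(joules_to_kwh(server), joules_to_kwh(factory))
```

Both come out as 0.1 kWh, which is why a kWh figure alone can’t tell you how many watts something draws.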

the boxes i have running 24/7 use about 20w max each, and about half that at idle or ‘normal’ loads.

AiBot post. Fuck this shit.

My 10 year old ITX NAS build with 4 HDDs used 40W at idle. Just upgraded to an Aoostar WTR Pro with the same 4 HDDs, uses 28W at idle. My power bill currently averages around US$0.13/kWh.

45 to 55 watt.

But I make use of it for backup and firewall. No cloud shit.

My whole setup, including 2 Pis and one fully specced-out AM4 system with 100TB of drives, an Intel Arc and 4x 32GB ECC RAM, uses between 280W and 420W. I live in Germany and pay 25ct per kWh, and my whole apartment uses 600W at any given time and approximately 15kWh per day 😭

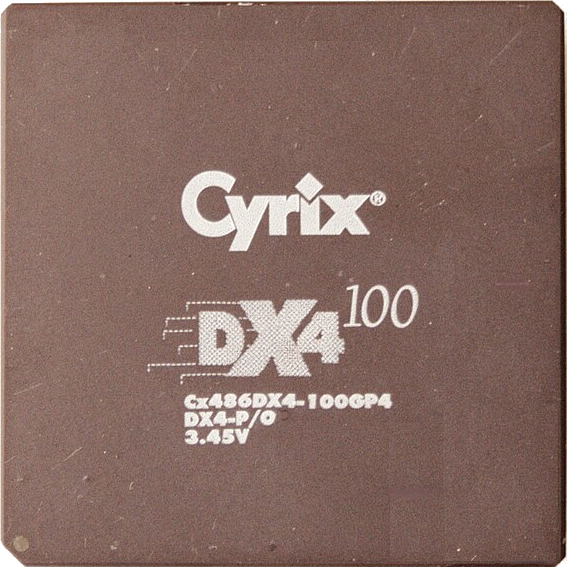

last I checked with a kill-a-watt I was drawing an average of 2.5kWh after a week of monitoring my whole rack. that was about three years ago and the following was running in my rack.

- r610 dual 1kw PSU

- homebuilt server Gigabyte 750w PSU

- homebuilt Asus gaming rig 650w PSU

- homebuilt Asus retro(xp) gaming/testing rig 350w PSU

- HP laptop as dev env/warmsite ~ 200w PSU

- Amcrest NVR 80w (I guess?)

- HP T610 65w PSU

- Terramaster F5-422 90w PSU

- TP-Link TL-SG2424P 180w PSU

- Brocade ICX6610-48P-E dual 1kw PSU

- Misc routers, rpis, poe aps, modems(cable & 5G) ~ 700w combined (cameras not included, brocade powers them directly)

I also have two battery systems split between high priority and low priority infrastructure.

I was drawing an average of 2.5kWh after a week of monitoring my whole rack

That doesn’t seem right; that’s only ~15W. Each one of those systems alone will exceed that at idle running 24/7. I’d expect 1-2 orders of magnitude more.
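That estimate is just metered energy divided by elapsed time. A quick sketch, assuming the 2.5 kWh is the total for the week (the helper name is hypothetical):

```python
def average_watts(energy_kwh, hours):
    """Average power (W) = energy (Wh) / elapsed time (h)."""
    return energy_kwh * 1000 / hours

week_hours = 7 * 24  # 168 h

# Reading 1: 2.5 kWh is the total consumed over the whole week.
print(round(average_watts(2.5, week_hours), 1))

# Reading 2: 2.5 kWh is what the rack consumes every hour.
print(average_watts(2.5, 1))
```

The first reading lands on the order of 15 W, the second at 2500 W, so which one the meter meant matters a lot.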

IDK, after a week of runtime it told me 2.5kwh average. could be average per hour?

Highest power bill I ever saw was summer of 2022. $1800. Temps outside were into the 110-120 range and it was the hottest ever here.

maybe I’ll hook it back up, but I’ve got different (newer) hardware now.

Ugh, I need to get off my ass and install a rack and some fiber drops to finalize my network buildout.

80-110W

17W for an N100 system with 4 HDD’s

Which HDDs? That’s really good.

Seagate Ironwolf “ST4000VN006”

I do have some issues with read speeds but that’s probably networking related or due to using RAID5.

That’s pretty low with 4 HDD’s. One of my servers uses 30 watts. Half of that is from the 2 HDD’s in it.

@meldrik @qaz I’ve got a bunch of older, smaller drives, and as they fail I’m slowly transitioning to much more efficient (and larger) HGST helium drives. I don’t have measurements, but anecdotally a dual-drive USB dock with crappy 1.5A power adapter (so 18W) couldn’t handle spinning up two older drives but could handle two HGST drives.

Between 50W (idle) and 140W (max load). Most of the time it is about 60W.

So about 1.5kWh per day, or 45kWh per month. I pay 0,22€ per kWh (France, 100% renewable energy) so about 9-10€ per month.
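For anyone who wants to plug in their own numbers, here is that calculation as a rough sketch. It assumes a constant draw around the clock and a 30-day month; the function names are only illustrative.

```python
def daily_kwh(watts):
    """Energy per day for a constant load: W * 24 h, converted to kWh."""
    return watts * 24 / 1000

def monthly_cost(watts, price_per_kwh, days=30):
    """Cost of running a constant load for `days` days."""
    return daily_kwh(watts) * days * price_per_kwh

# The three load levels mentioned above, at 0.22 EUR per kWh.
for label, watts in [("idle", 50), ("typical", 60), ("max load", 140)]:
    print(f"{label}: {daily_kwh(watts):.2f} kWh/day, "
          f"{monthly_cost(watts, 0.22):.2f} EUR/month")
```

At the typical 60W this gives 1.44 kWh per day and about 9.50 EUR per month, in line with the figures above.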

Are you including nuclear power in renewable or is that a particular provider who claims net 100% renewable?

Net 100% renewable, no nuclear. I can even choose where it comes from (in my case, a wind farm in northwest France). Of course, not all of my electricity comes from there, but I have the guarantee that renewable energy bonds equivalent to my consumption will be bought from there, so it is basically the same.

Thanks. I buy Vattenfall but make net 2/3rds of my own power via rooftop solar.

Idles at around 24W. It’s amazing that your server only needs .1kWh once and keeps on working. You should get some physicists to take a look at it, you might just have found perpetual motion.

.1kWh is 100Wh

Good point. Now it does make sense. I know the secret to the perpetual motion machine now.

I ate sushi today.

This is a factual but irrelevant statement

Around 18-20 Watts on idle. It can go up to about 40 W at 100% load.

I have an Intel N100, I’m really happy about performance per watt, to be honest.

Is there a (Linux) command I can run to check my power consumption?

Get a Kill-a-Watt meter.

Or smart sockets. I got multiple of them (ZigBee ones), they are precise enough for most uses.

If you have a laptop/something that runs off a battery, `upower`.

If you have a server with out-of-band/lights-out management such as iDRAC (Dell), iLO (HPE), IPMI (generic, Supermicro, and others) or equivalent, those can measure the server’s power draw at both PSUs and total.
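For the battery case, the kernel usually exposes the same data under sysfs, so a few lines of Python can read it. This is only a sketch with assumptions: the battery may not be called `BAT0`, and depending on the driver you get either `power_now` (microwatts) or `current_now` and `voltage_now` (microamps, microvolts). It reports the battery’s charge or discharge rate, which only approximates total system draw while running unplugged.

```python
from pathlib import Path

def battery_watts(supply_dir="/sys/class/power_supply/BAT0"):
    """Return the battery's current power flow in watts, or None if unavailable.

    Assumes the kernel exposes power_now (microwatts), or current_now
    (microamps) plus voltage_now (microvolts); not every machine has these.
    """
    base = Path(supply_dir)
    try:
        return int((base / "power_now").read_text()) / 1e6
    except (OSError, ValueError):
        pass  # fall back to current * voltage
    try:
        microamps = int((base / "current_now").read_text())
        microvolts = int((base / "voltage_now").read_text())
        return microamps * microvolts / 1e12
    except (OSError, ValueError):
        return None

if __name__ == "__main__":
    watts = battery_watts()
    print("no battery reading available" if watts is None else f"{watts:.1f} W")
```

On a desktop or server without a battery this just reports that no reading is available, so a wall meter or the BMC is still the way to go there.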

My server uses about 6-7 kWh a day, but it’s a dual CPU Xeon running quite a few dockers. Probably the thing that keeps it busiest is being a file server for our family and a Plex server for my extended family (so a lot of the CPU usage is likely transcodes).

Pulling around 200W on average.

- 100W for the server. Xeon E3-1231v3 with 8 spinning disks + HBA, couple of sata SSD’s

- ~80W for the unifi PoE 48 Pro switch. Most of this is PoE power for half a dozen cameras, downstream switches and AP’s, and a couple of raspberry pi’s

- ~20W for protectli vault running Opnsense

- Total usage measured via Eaton UPS

- Subsidised during the day with solar power (Enphase)

- Tracked in home assistant